This is a brief summary of ML course provided by Andrew Ng and Stanford in Coursera.

You can find the lecture video and additional materials in

https://www.coursera.org/learn/machine-learning/home/welcome

Coursera | Online Courses From Top Universities. Join for Free

1000+ courses from schools like Stanford and Yale - no application required. Build career skills in data science, computer science, business, and more.

www.coursera.org

One implementation detail of unrolling your parameters from matrices into vectors, which we need in order to use the advanced optimization routines.

Our parameters are no longer vectors in neural network, but instead, they are matrices where for a full neural network we would have parameter matrices theta1, theta2, theta3, and gradient matrices D1, D2, D3.

"Unroll" into vectors

In Octave,

thetaVec = [Theta1(:), Theta2(:), Theta3(:)]; // this will take all elements in each theta matrices, and unroll them and put all the elements into a big long vector.

dVec = [D1(:), D2(:), D3(:)]; // Similarly, take all D matrices and unroll them into a big long vector.

Finally, if you want to go back to vector representation to the matrix representation, what you do to get back theta one say is take thetaVec and pull out the first 110 elements. and use the reshape command to reshape those back into theta 1. Similiar logic to theta 2 and 3.

Quiz: Suppose D1 is a 10x6 matrix and D2 is a 1x11 matrix. You set:

DVec = [D1(:); D2(:)]; Which of the following would get D2 back from DVec?

a. reshape(DVec(60:71), 1, 11)

b. reshape(DVec(61:72), 1, 11)

c. reshape(DVec(61:71), 1, 11)

d. reshape(DVec(60:70), 11, 1)

Answer: c

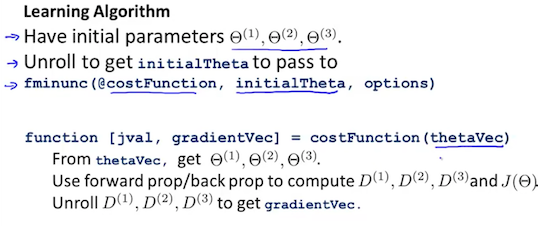

Unrolling idea to implement the learning algorithm.

Have initial parameters $\theta^{(1)}, \theta^{(2)}$, \theta^{(3)}$.

Unroll to get initialtheta to pass to

fminunc (@costFunction, initialTheta, options)

function[jval, gradientVec] = costFunction(thetaVec)

from thetaVec, get $\theta^{(1)}, \theta^{(2)}$, \theta^{(3)}$.

use forward prop/back prop to compute D1, D2, D3 and $j(\theta)$

unroll d1, d2, d3 to get gradientVec.

The advantage of matrix representation is that when your params are stored as matrices. it's more convenient when you are doing forward propagation and back propagation and it's easier when your params are stored as matrices to take advantage of the, sort of, vectorized implmentation.

Whereas, in contrast, the advantage of the vector representation, when you have like thetaVec or dVec, when you are using the advanced optimization algorithms. Those algorithms tend to assume that you have all of your params unrolled into a big long vector.

Lecturer's Note:

Implementation Note: Unrolling Parameters

With neural networks, we are working with sets of matrices:

In order to use optimizing functions such as "fminunc()", we will want to "unroll" all the elements and put them into one long vector:

If the dimensions of Theta1 is 10x11, Theta2 is 10x11 and Theta3 is 1x11, then we can get back our original matrices from the "unrolled" versions as follows:

To summarize: